A personal DIY project

Disclaimer:

1 Is it a worthwhile exercise?

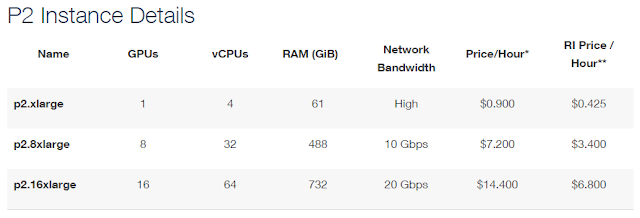

Data scientists working outside well-funded labs find it hard to gain access to a GPU-accelerated computing system. One of the (allegedly) popular options is to use AWS EC2 p2 instances. Let us have a look.

The prices start at around USD 8,000 and can go up to ...; for example, check out the NVIDIA® DGX-1™ (well, it is actually a server), which costs USD 125K and upward!

Clearly, there is a good case for building your own small data-science and deep-learning workstation.

2 How do GPUs help in deep learning?

Nevertheless, despite their individual simplicity, a large number of SPs can be extremely suitable for certain types of computation, namely large matrix multiplications. Fundamentally, this involves a great many multiplications of pairs of numbers, with the results then added in a certain order. In a CPU, even with multiple cores/threads, multiplying each pair of numbers in each thread involves (1) fetching the numbers from memory (if lucky, from cache), (2) multiplying them and (3) writing the result back to memory ... and this goes on and on. Even with, say, 20 cores/threads, it will take a helluva lot of time to multiply two really large matrices, say 1M x 1M. Unfortunately, we face such problems only too frequently in scientific computing. They can be accomplished much more efficiently on GPUs, in the manner depicted in the CUDA Toolkit documentation.
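To make the "lots of independent multiply-and-add" point concrete, here is a minimal pure-Python sketch of a naive matrix product (my own illustration, not code from the CUDA documentation). Every output element C[i][j] is a dot product that depends on no other output element, which is exactly the kind of work that can be handed to many simple GPU cores at once. The helper `multiply_add_count` is a hypothetical name that just counts the n^3 multiply-adds of the naive algorithm.

```python
def matmul(a, b):
    """Naive matrix product C = A x B using plain Python lists."""
    n, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must agree")
    m = len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            # C[i][j] is an independent dot product: k multiplications and
            # k additions. No C[i][j] depends on any other, so on a GPU each
            # one (or a tile of them) can be computed by a separate thread.
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]
            c[i][j] = acc
    return c


def multiply_add_count(n: int) -> int:
    """Multiply-add operations in a naive (n x n) by (n x n) product."""
    return n ** 3


if __name__ == "__main__":
    print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19.0, 22.0], [43.0, 50.0]]
    # A 1M x 1M product needs 10**18 multiply-adds with the naive algorithm.
    print(multiply_add_count(10**6))
```

The triple loop is deliberately naive: real libraries use blocked, vectorized kernels, but the independence of the output elements is the same.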

However, these are intended for graphics programmers and designed to make their lives simpler. Thus the APIs are designed to work with graphics objects and operations. They allow (just for illustration) things like: create a triangle T, rotate T by ..., add texture TX to T, ..., etc. Clearly, that is not going to work for other kinds of computation. We need a high-level language interface for general-purpose GPU (parallel) programming. NVIDIA has just that for its CUDA-capable GPUs: a development toolkit consisting of (1) an extension of the C language, (2) a set of general-purpose APIs and (3) a good compiler.
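The core idea of the CUDA C extension is that you write a kernel for one thread, and each thread works out which data element it owns from its block and thread indices. The sketch below emulates that index arithmetic in plain Python for a SAXPY kernel (out = alpha * x + y). The names `blockIdx`, `blockDim` and `threadIdx` are real CUDA built-ins, but `saxpy_kernel`, `launch` and `saxpy` are hypothetical helpers of mine, and the loops run sequentially; on a real GPU the threads run in parallel.

```python
def saxpy_kernel(block_idx, block_dim, thread_idx, alpha, x, y, out):
    """Work done by ONE thread; mirrors a CUDA kernel body."""
    # CUDA C equivalent: int i = blockIdx.x * blockDim.x + threadIdx.x;
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # guard: the last block may contain surplus threads
        out[i] = alpha * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Sequential stand-in for kernel<<<grid_dim, block_dim>>>(args...)."""
    for b in range(grid_dim):
        for t in range(block_dim):
            kernel(b, block_dim, t, *args)


def saxpy(alpha, x, y, block_dim=4):
    n = len(x)
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) blocks
    out = [0.0] * n
    launch(saxpy_kernel, grid_dim, block_dim, alpha, x, y, out)
    return out


if __name__ == "__main__":
    print(saxpy(2.0, [1, 2, 3, 4, 5], [10, 10, 10, 10, 10]))
```

With five elements and a block size of 4, two blocks (8 threads) are launched and the bounds check makes the three extra threads do nothing, which is the usual CUDA idiom.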

So, it is easy to see how GPGPU capability can support deep learning. Fortunately for us, most of the time we need not develop our code with the CUDA toolkit directly. Libraries are available in almost all of the popular languages that let us write deep-learning code in our favorite language and take on the burden of converting it into GPU-executable code.
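As one illustration of such a library, here is a small sketch using PyTorch (my choice of example; the post does not name a specific library). The same matrix-multiply line runs on the CPU or the GPU, and the library dispatches the CUDA work. The helpers `pick_device` and `matmul_on_best_device` are hypothetical names; `torch.cuda.is_available`, `torch.randn(..., device=...)` and the `@` operator are real PyTorch features. The script falls back to the CPU if PyTorch or a CUDA GPU is missing.

```python
try:
    import torch
except ImportError:  # PyTorch not installed: nothing can go to the GPU
    torch = None


def pick_device() -> str:
    """Return "cuda" when PyTorch can see a CUDA GPU, otherwise "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


def matmul_on_best_device(n: int = 1024):
    """Multiply two random n x n matrices on the GPU if one is available."""
    if torch is None:
        raise RuntimeError("PyTorch is required for this example")
    device = pick_device()
    a = torch.randn(n, n, device=device)
    b = torch.randn(n, n, device=device)
    # Identical Python for CPU and GPU; PyTorch launches the CUDA kernels for us.
    return a @ b


if __name__ == "__main__":
    print("running on:", pick_device())
```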

3 Design issues

An interesting build case study is available on the Analytics Vidhya blog. Here I intend to go a little deeper and be somewhat pedantic. Our discussion will attempt to help resolve the following issues:

3.1 CPU

Like everything in computing, PCIe has gone through several versions. Here is the chart from the Wikipedia page:
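The headline bandwidth of a PCIe link follows from three numbers: the per-lane transfer rate, the line-encoding efficiency and the lane count. The sketch below uses the published rates for generations 1 to 4 (2.5, 5, 8 and 16 GT/s; 8b/10b encoding up to 2.0, 128b/130b from 3.0). It gives theoretical one-direction figures and ignores packet and protocol overhead, so real throughput is somewhat lower. The function name `pcie_bandwidth_gb_s` is my own.

```python
# Per-lane transfer rate (GT/s) and encoding efficiency for each PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
}


def pcie_bandwidth_gb_s(generation: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe link, in GB/s."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    # One bit per transfer per lane; divide by 8 to convert bits to bytes.
    return rate_gt_s * efficiency * lanes / 8


if __name__ == "__main__":
    for lanes in (16, 8, 4):
        print(f"PCIe 3.0 x{lanes}: {pcie_bandwidth_gb_s(3, lanes):.2f} GB/s")
```

For PCIe 3.0 this gives about 15.75 GB/s for x16 and 7.88 GB/s for x8, which is why an x8 slot is still "nearly 8 GB/s".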

Now, in the i7 family things are a little convoluted. At this moment, the consumer processors are in their 7th generation, and only the i7-7700 and i7-7700K have been launched so far. These have (among other specs) 4 cores, 8 MB of cache and 16 PCIe lanes, and support up to 64 GB of memory over two channels. For our purposes, they are not very suitable.

3.2 GPU

The GPU is the single most important and (possibly) costliest component in the system. You may dream about a Tesla K80, but do not say it aloud unless your deep-pocketed organization is strongly backing the build. We have to settle for less, but which one? Tim Dettmers provides a nice overview of GPU usage for deep learning; his analysis of the choices is shown in Table 1. Table 2 shows the prices of three next-level, but still cutting-edge, GPUs.

Multiple GPUs in parallel:

What will happen if I throw in more than one GPU? NVIDIA offers a multi-GPU scaling/parallelizing technology called Scalable Link Interface (SLI), which can support 2/3/4-way parallelism. It seems to work well for some games, especially those designed to take advantage of it. At this time, it is not clear how well SLI scales for deep-learning tasks. Tim Dettmers provides some useful pointers, from which it seems that only a few deep-learning libraries can take advantage of this. Nevertheless, he also argues that using multiple GPUs, even independently, allows one to run multiple DL tasks simultaneously.
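Running independent tasks on separate GPUs needs no SLI at all. A common approach is to start each job with the `CUDA_VISIBLE_DEVICES` environment variable (a real CUDA runtime setting) restricted to one card. The sketch below is my own illustration: `plan_gpu_envs` and `run_independent_jobs` are hypothetical helpers that assign GPUs round-robin and launch the jobs concurrently with `subprocess`.

```python
import os
import subprocess
from typing import Dict, List


def plan_gpu_envs(num_jobs: int, num_gpus: int) -> List[Dict[str, str]]:
    """Build one environment per job, each exposing a single GPU (round-robin)."""
    if num_gpus < 1:
        raise ValueError("need at least one GPU")
    envs = []
    for job in range(num_jobs):
        env = dict(os.environ)
        # CUDA-aware libraries see only the listed card and number it 0.
        env["CUDA_VISIBLE_DEVICES"] = str(job % num_gpus)
        envs.append(env)
    return envs


def run_independent_jobs(commands: List[List[str]], num_gpus: int) -> List[int]:
    """Start every command at once, each pinned to one GPU, then wait for all."""
    envs = plan_gpu_envs(len(commands), num_gpus)
    procs = [subprocess.Popen(cmd, env=env) for cmd, env in zip(commands, envs)]
    return [p.wait() for p in procs]
```

For example, `run_independent_jobs([["python", "train_a.py"], ["python", "train_b.py"]], num_gpus=2)` (hypothetical script names) would train two models side by side, one per card. With more jobs than GPUs, the round-robin assignment makes some jobs share a card.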

3.3 Motherboard (or Mobo for brevity)

3.4 Others

4 Budget Issues

5 My build

5.1 My budget

I am based in India, and that has some cost implications. Almost none of the components are manufactured in the country, so they have to be imported and import duty is levied; thus I get somewhat less bang for my buck than someone in, say, the USA. My strategy is to get a somewhat minimal (but sufficient for moderate-size problems) system up and running and then expand its capability as required. So let us get down to the initial system budget and configuration.

5.2 CPU

Now, PCIe 3.0 x8 has half the bandwidth of x16, but that is still nearly 8 GB/s. The question to ask is whether an x8 connection is enough for deep-learning workloads. Unfortunately, I could not find an exact answer. However, in the context of gaming, some test results are available, which found an inconsequential (within the margin of error) difference in performance between x16 and x8 on PCIe 3.0. Computationally, graphics processing and deep learning both boil down, more or less, to multiplying large matrices. So we can expect similar behavior from the system for DL tasks.
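A back-of-the-envelope check helps frame the x8 versus x16 question: how long does it take just to copy one mini-batch to the GPU? The sketch assumes a hypothetical batch of 256 RGB images at 224 x 224 pixels stored as float32, and uses the theoretical PCIe 3.0 rates after 128b/130b encoding. It gives only the transfer time; whether that matters depends on how long the GPU then spends computing on the batch, which this sketch does not estimate.

```python
def transfer_ms(num_bytes: int, bandwidth_gb_s: float) -> float:
    """Milliseconds to copy num_bytes over a link of bandwidth_gb_s (1 GB = 1e9 B)."""
    return num_bytes / (bandwidth_gb_s * 1e9) * 1e3


# Hypothetical mini-batch: 256 images x 224 x 224 pixels x 3 channels x 4 bytes.
BATCH_BYTES = 256 * 224 * 224 * 3 * 4  # 154,140,672 bytes

# Theoretical PCIe 3.0 rates: 8 GT/s per lane, 128b/130b encoding, 8 bits per byte.
X16_GB_S = 8 * 128 / 130 * 16 / 8  # about 15.75 GB/s
X8_GB_S = 8 * 128 / 130 * 8 / 8    # about 7.88 GB/s

if __name__ == "__main__":
    for name, bw in (("x16", X16_GB_S), ("x8", X8_GB_S)):
        print(f"{name}: {transfer_ms(BATCH_BYTES, bw):.1f} ms per batch")
    # x16: 9.8 ms per batch
    # x8: 19.6 ms per batch
```

If each training step keeps the GPU busy for much longer than these few milliseconds, or if data loading overlaps with computation, the slower x8 link should make little difference, which is consistent with the gaming results.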

Budget impact:

5.3 Motherboard

- Memory

- Expansion Slots

- Storage

- Multi-GPU Support

Budget impact:

5.4 GPU

Budget impact:

5.5 Memory modules

Budget impact:

5.6 Secondary storage

Budget impact:

5.7 PSU

The PSU must comfortably meet the system's overall power demand. I used an online tool to compute the system's power requirement for a configuration with 2 GPUs, 8 RAM modules and 2 SATA drives. The details of the calculation are shown in the Appendix. Based on that calculation, I selected the CORSAIR CX Series CX850M 850W ATX12V / EPS12V 80 PLUS BRONZE Certified Modular Power Supply. Hopefully, this will be good enough until I try to add a third GPU.
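The arithmetic behind a PSU calculator is essentially a sum of component draws plus a safety margin, rounded up to an available PSU rating. The sketch below shows that logic only. The 25% headroom and the list of ratings are my assumptions, not the online tool's method, and the per-component wattages must come from datasheets or a calculator like the one used above.

```python
from typing import Dict, Iterable


def recommended_psu_watts(loads_w: Dict[str, float], headroom: float = 0.25) -> float:
    """Total estimated draw (watts) of all components plus a safety margin."""
    return sum(loads_w.values()) * (1 + headroom)


def pick_psu(required_w: float,
             ratings_w: Iterable[int] = (550, 650, 750, 850, 1000, 1200)) -> int:
    """Smallest PSU rating (from an assumed list) that covers the requirement."""
    for rating in sorted(ratings_w):
        if rating >= required_w:
            return rating
    raise ValueError("no listed PSU rating is large enough")
```

Usage: fill a dict such as `{"cpu": ..., "gpus": ..., "memory": ..., "drives": ...}` with datasheet figures, pass the result of `recommended_psu_watts` to `pick_psu`, and re-run it before adding a third GPU.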
